Learning-based Single View to 3D

- I designed a single-view-to-3D reconstruction pipeline for image-to-voxel, image-to-mesh, and image-to-point-cloud generation

- I achieved an F1-score of 0.88 for the point cloud reconstruction and also extended the network for occupancy queries

Single-view-to-3D reconstruction is a recent research topic in 3D vision. It involves building neural networks that can produce a 3D representation of an object shown in a single image. This project deals with this kind of network. I built three different models, for image-to-voxel, image-to-mesh, and image-to-point-cloud reconstruction. The project is implemented in Python 3 and uses the packages PyTorch, PyTorch3D, NumPy, and Matplotlib. It was implemented as part of my Spring 2023 course at CMU, Learning for 3D Vision, taught by Prof. Shubham Tulsiani.

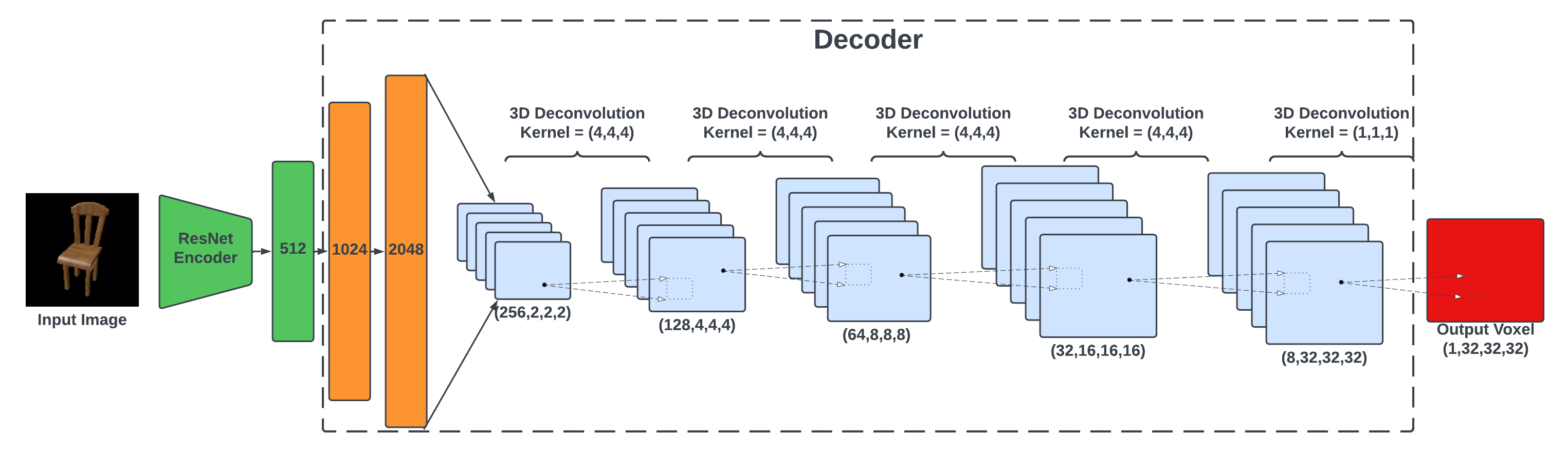

Image to Voxel

The neural network used for this part can be seen in the image below. An input image is fed through a ResNet encoder, which produces a 1D embedding of size 512 (shown in green). This is passed through the decoder, which starts with an MLP (shown in orange). The output of this MLP (size 2048) is unflattened into a tensor of size (256,2,2,2). This is fed into multiple 3D deconvolutional layers, which output a voxel grid of size (32,32,32) at the end (shown in red). Each MLP layer and each deconvolutional layer, except the last one, is paired with a ReLU activation and BatchNorm.
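The decoder described above can be sketched as follows. This is a minimal illustration rather than the project's actual code: the class name `VoxelDecoder`, the intermediate channel widths (128, 64, 32), the kernel/stride/padding values, and the layer order (layer, then BatchNorm, then ReLU) are all assumptions chosen so that the shapes match the ones given in the text.

```python
import torch
import torch.nn as nn


class VoxelDecoder(nn.Module):
    """Maps a 512-d image embedding to a (32, 32, 32) grid of occupancy logits."""

    def __init__(self, embed_dim: int = 512):
        super().__init__()
        # MLP: 512 -> 2048, later unflattened to (256, 2, 2, 2).
        self.mlp = nn.Sequential(
            nn.Linear(embed_dim, 2048),
            nn.BatchNorm1d(2048),
            nn.ReLU(),
        )

        def up(c_in: int, c_out: int) -> nn.Sequential:
            # kernel 4, stride 2, padding 1 doubles every spatial dimension.
            return nn.Sequential(
                nn.ConvTranspose3d(c_in, c_out, kernel_size=4, stride=2, padding=1),
                nn.BatchNorm3d(c_out),
                nn.ReLU(),
            )

        self.deconv = nn.Sequential(
            up(256, 128),  # 2 -> 4
            up(128, 64),   # 4 -> 8
            up(64, 32),    # 8 -> 16
            # Last layer: no BatchNorm or ReLU (assumed channel widths).
            nn.ConvTranspose3d(32, 1, kernel_size=4, stride=2, padding=1),  # 16 -> 32
        )

    def forward(self, z: torch.Tensor) -> torch.Tensor:
        x = self.mlp(z).view(-1, 256, 2, 2, 2)  # unflatten the 2048-d vector
        return self.deconv(x).squeeze(1)        # (B, 32, 32, 32)
```

The embedding `z` would come from the ResNet encoder; for a quick shape check, `VoxelDecoder()(torch.randn(4, 512))` can be used with a random embedding instead.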

The model was trained on images of multiple chairs for ~5000 epochs. The results can be seen below.

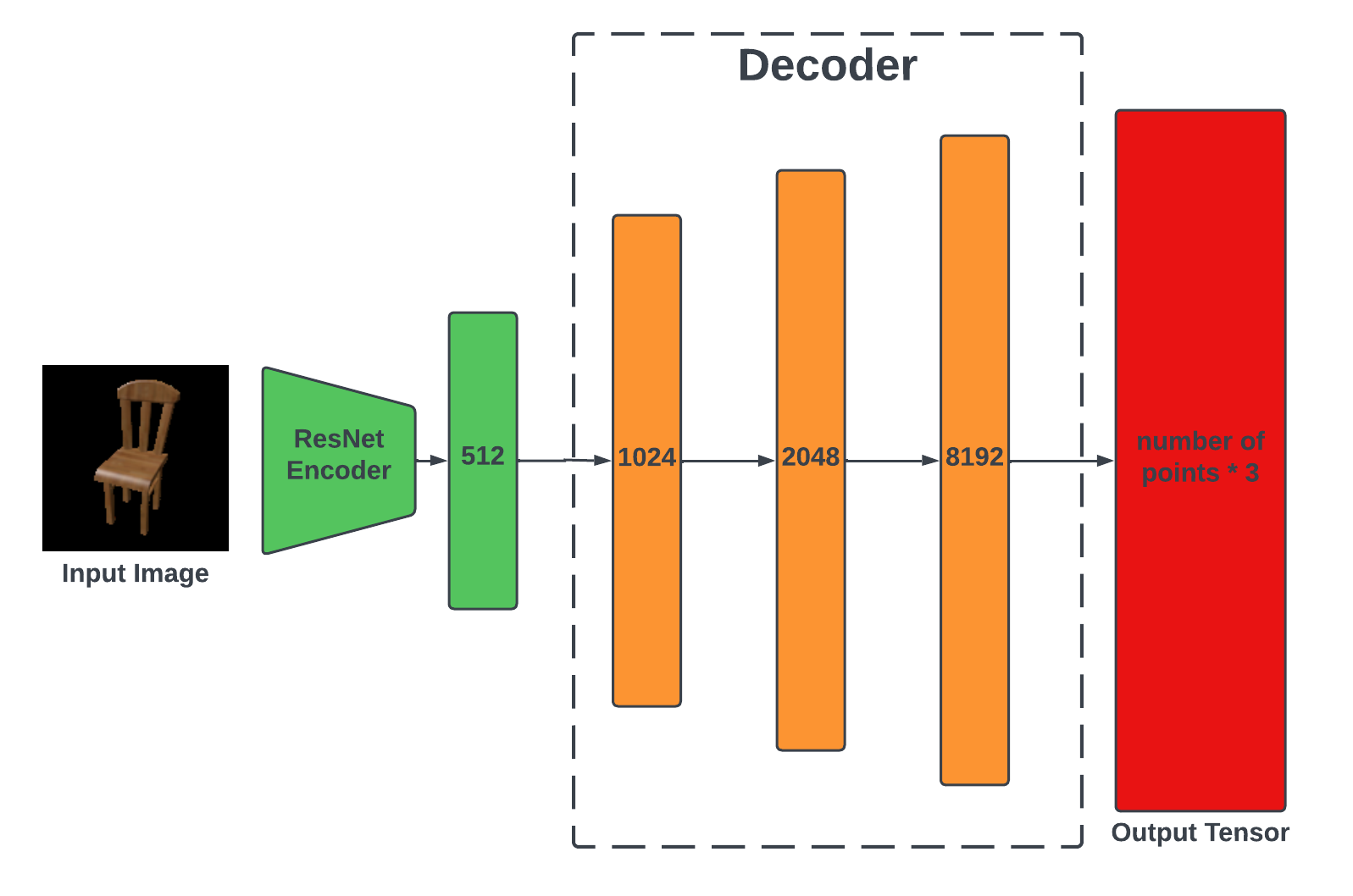

Image to Point Cloud

The model used for this part is shown below. Its initial structure is similar to the voxel case: a ResNet encoder, followed by a multi-layer perceptron (MLP) as the decoder. The decoder outputs a flat tensor of size (number of points × 3), which is then reshaped to (number of points, 3). The reason for choosing an MLP instead of deconvolutional layers is that a point cloud is a discrete set of points with no grid structure, unlike voxels, which lie on a regular spatial grid.
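A minimal sketch of such an MLP decoder is given below. The class name `PointDecoder`, the hidden width of 1024, the two-layer depth, and the default of 1000 points are assumptions for illustration; the text only fixes the 512-d embedding and the (number of points, 3) output shape.

```python
import torch
import torch.nn as nn


class PointDecoder(nn.Module):
    """Maps a 512-d image embedding to a point cloud of shape (n_points, 3)."""

    def __init__(self, embed_dim: int = 512, n_points: int = 1000, hidden: int = 1024):
        super().__init__()
        self.n_points = n_points
        self.mlp = nn.Sequential(
            nn.Linear(embed_dim, hidden),     # assumed hidden width
            nn.ReLU(),
            nn.Linear(hidden, n_points * 3),  # flat output: n_points * 3 values
        )

    def forward(self, z: torch.Tensor) -> torch.Tensor:
        # Reshape the flat output into one (x, y, z) row per point.
        return self.mlp(z).view(-1, self.n_points, 3)  # (B, n_points, 3)
```

Because every output coordinate is produced by a fully connected layer, the decoder does not impose any spatial ordering on the points.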

The training for this model was similar to that for the voxel case, with multiple chair images and ~5000 epochs. The results can be seen below.

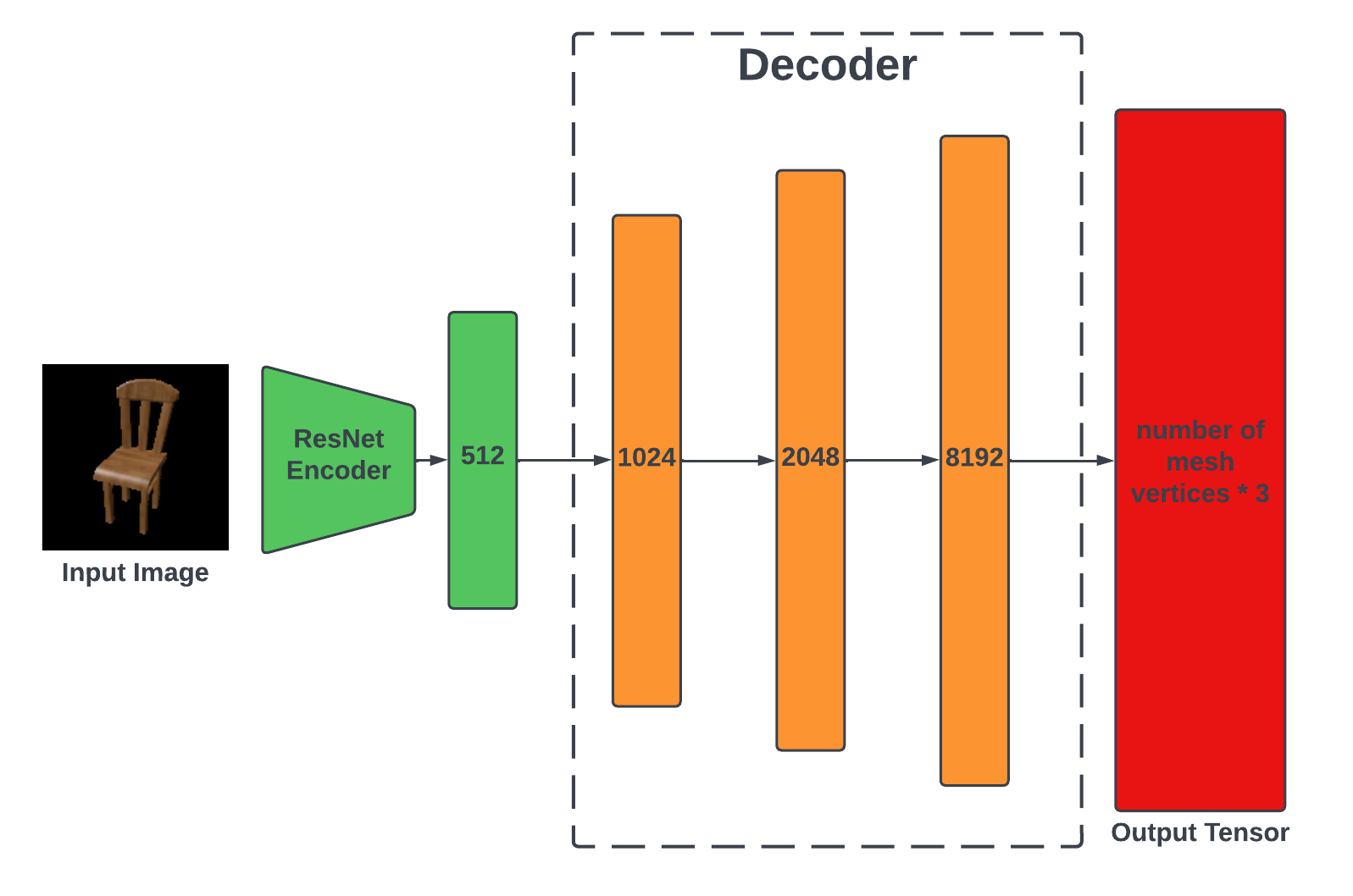

Image to Mesh

The model used for this case is the same as the one used for the point cloud case, except that the output tensor now has size (number of mesh vertices × 3). The output is reshaped to (number of vertices, 3) and then converted into a mesh using `pytorch3d.structures.Meshes`.

The training for this model was similar to that for the voxel case, with multiple chair images and ~5000 epochs. The results can be seen below.

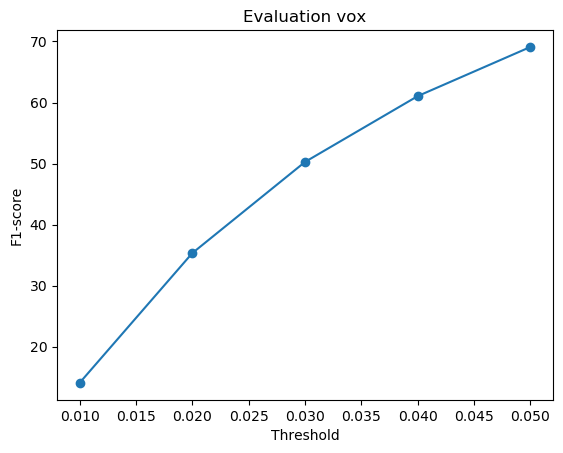

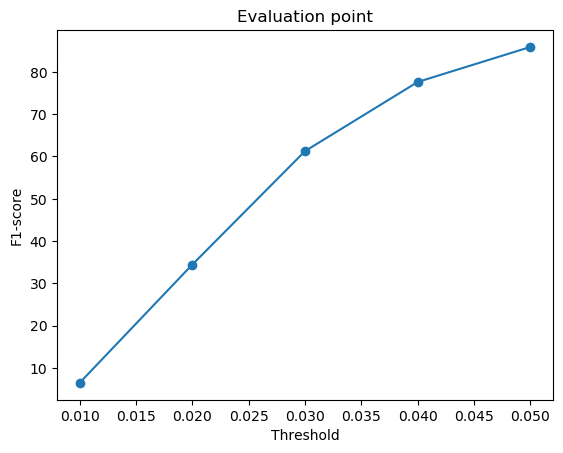

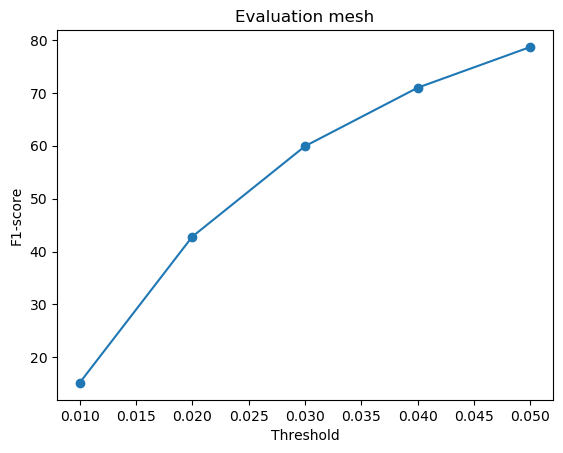

Quantitative Results

The quantitative results of the models can be seen below. Specifically, they show the F1-score of the three models at different thresholds. From left to right, the figures are for voxels, point clouds, and meshes.
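For reference, one common way to compute an F1-score between a predicted and a ground-truth point set at a given distance threshold is sketched below. This is an assumed formulation, not necessarily the exact evaluation code used here: the function name `f1_at_threshold` is hypothetical, and for voxels and meshes it assumes points have first been sampled from the predicted and ground-truth surfaces.

```python
import torch


def f1_at_threshold(pred: torch.Tensor, gt: torch.Tensor, threshold: float) -> float:
    """F1-score in [0, 1] between point sets pred (N, 3) and gt (M, 3)."""
    dists = torch.cdist(pred, gt)  # (N, M) pairwise Euclidean distances

    # Precision: fraction of predicted points close to some ground-truth point.
    precision = (dists.min(dim=1).values < threshold).float().mean()
    # Recall: fraction of ground-truth points close to some predicted point.
    recall = (dists.min(dim=0).values < threshold).float().mean()

    if (precision + recall).item() == 0.0:
        return 0.0
    return (2 * precision * recall / (precision + recall)).item()
```

Sweeping `threshold` over a range of values and plotting the returned score gives a curve of the kind described above.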